Necessary repetitive interactions don’t need to take as much time to type or brain space to remember

Photo by Christopher Gower on Unsplash

As an engineer, one of the most powerful tools you likely use in a day is your favorite command-line shell. You use it for building, running, and testing projects, committing code, writing scripts, configuring environments, and more. These monotonous, repetitive interactions are necessary to our day-to-day work, but they don’t need to take as much time to type or brain space to remember. Building a custom CLI that wraps common commands, or even something more sophisticated like a build script, can be a huge productivity booster for your team.

What is a command-line interface (CLI)?

A CLI, or command-line interface, describes the process of interacting with a program via lines of text, usually sending commands and receiving informational output.

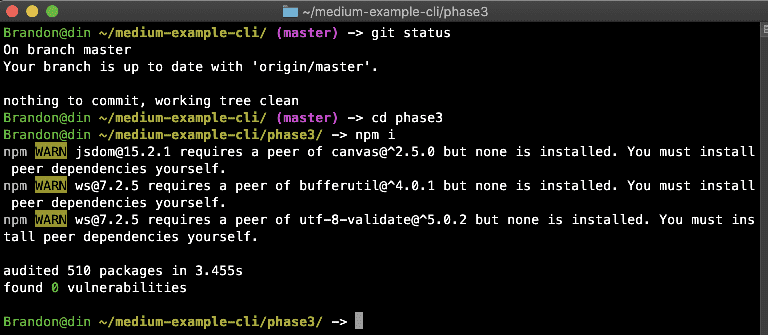

A screenshot of a terminal window running CLI commands

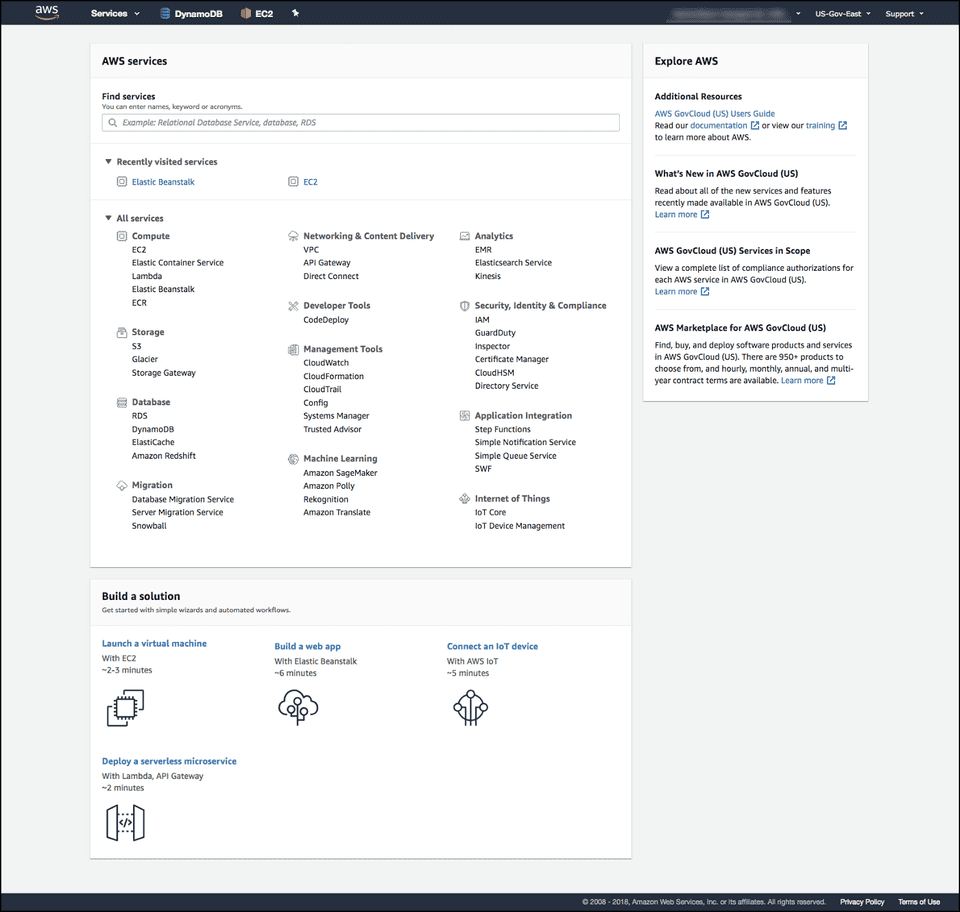

Nowadays, CLIs are often forgotten in favor of programs with GUIs, or graphical user interfaces. GUIs are a more visual way to interact with an application, usually built with buttons, sliders, dropdown menus, context menus, etc. What you may not know is that many applications with GUIs are also powered by a CLI, or at least offer a CLI with feature parity. Take Amazon Web Services for example: they offer a suite of web applications for spinning up cloud infrastructure in what they call the AWS Management Console.

Screenshot of the AWS Management Console landing page. Source: https://aws.amazon.com/about-aws/whats-new/2018/12/usability-improvements-for-aws-management-console/

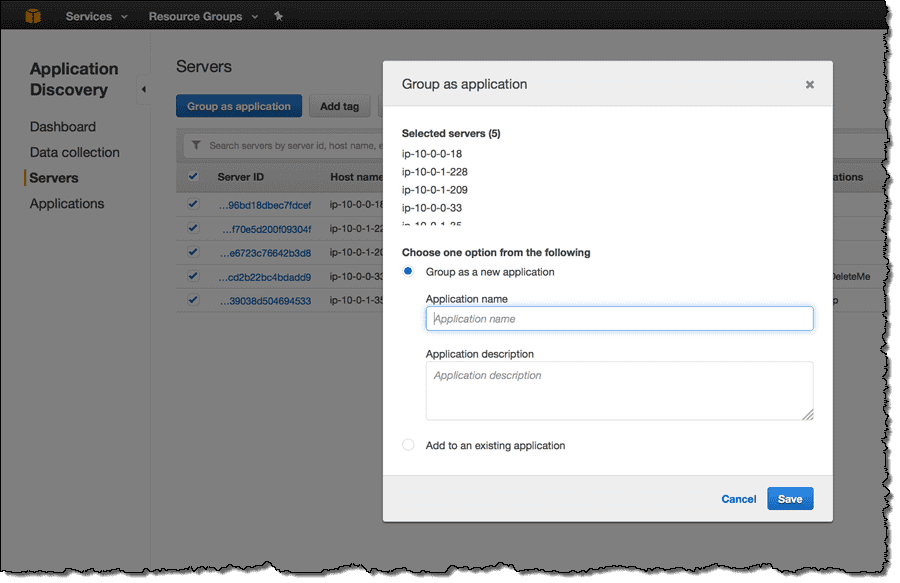

Screenshot of the AWS Application Discovery service with a settings modal open. Source: https://aws.amazon.com/blogs/aws/category/aws-management-console/

While many users will prefer to configure their services this way, others will prefer a simpler interface, one that can also be used to automate their workflows (such as powering down and spinning up new environments.) Amazon offers a CLI to interact with their services as well, which is well documented.

Why should your development team invest in a CLI?

Many engineers, myself included, visit the command-line regularly while developing. The projects I’m working on often have a back-end and front-end element which require separate processes to run, and occasionally other dependencies (Redis, Docker, etc.)

Each of these has its own CLI, and it can be hard to remember the exact commands, arguments, and optional flags, as well as where you need to be in your directory structure for them to work as expected.

For example, if I want to create a new Entity Framework migration for my .NET Core project, I would need to be in the directory of the database layer of my solution, and pass the directory to the presentation layer of my solution to the command:

```
dotnet ef migrations add <migration name> --startup-project dotnet/api/Presentation/Web/Web.csproj
```

Not only is this verbose to remember, but it requires changing directories, too. When I’m developing a feature and I need to create a database migration, all I really want or need to care about should be the name of the migration after I’ve made the necessary code changes.

Using the AndcultureCode.Cli that we built using ShellJS and Commander, the above command was reduced to:

```
and-cli migration -a <migration name>
```

The command does the grunt work of checking to see if you’re in a valid .NET solution directory, finding the data project, and finding the presentation project. All you need to send it is a migration name.
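To make the idea concrete, here is a minimal sketch of the kind of expansion such a wrapper performs. It is not the AndcultureCode.Cli source: the function name buildAddMigrationCommand and its startupProject parameter are hypothetical, and the steps that locate the data and presentation projects are left out.

```javascript
// Hypothetical helper (not part of AndcultureCode.Cli): expands a migration name
// into the long-form Entity Framework command shown earlier.
function buildAddMigrationCommand(migrationName, startupProject) {
    if (typeof migrationName !== "string" || migrationName.trim() === "") {
        throw new Error("A migration name is required");
    }

    return `dotnet ef migrations add ${migrationName} --startup-project ${startupProject}`;
}

// The startup project path is the one from the example above; a real CLI would
// locate it by searching the solution directory instead of hard-coding it.
const command = buildAddMigrationCommand(
    "AddUsersTable",
    "dotnet/api/Presentation/Web/Web.csproj"
);

console.log(command);
```

The caller only supplies the migration name; everything else is filled in by the wrapper, which is the whole point of the shorter command.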

While this may seem like a small gain at first glance, it takes the guesswork out of that process, and lets you get back to your primary work sooner. I’m willing to bet there are a number of situations like this that you encounter on a daily basis.

How do you build a CLI?

For building command-line applications, most people tend to use Bash, which is a shell that can execute commands from user input, or execute a series of commands from a file (called a shell script.) Bash is a natural first choice for many developers that work on Mac or Linux-based systems, since it is commonly the default shell when you open a new terminal instance.

Consider the following function which wraps the dotnet restore command and provides some additional output.

```bash
#!/bin/bash

DOTNET_SOLUTION_FILE_PATH=/home/brandon/projects/DotnetExample

# Restores dotnet solution nuget dependencies
restoreDotnetSolution() {
    echo "Running dotnet restore on the solution..."
    dotnet restore $DOTNET_SOLUTION_FILE_PATH
    echo "Dotnet solution restored"
}
```

This doesn’t seem too complicated, but it isn’t taking any arguments for input. The only variable is DOTNET_SOLUTION_FILE_PATH which is defined manually in the same file. We could pull that out into another function that looks for a common .NET Core project structure under the current directory so it is a bit more dynamic.

```bash
#!/bin/bash

DOTNET_SOLUTION_FILE_PATH=""

# Checks three common locations under the current directory for a .sln file, or exits if one
# is not found. If found, DOTNET_SOLUTION_FILE_PATH is set to the value.
findDotnetSolution() {
    LS_RESULT=`ls *.sln`
    # If the exit status of that ls command is 0, it means at least one file matching the pattern exists.
    if [ $? -eq 0 ];
    then
        echo "Dotnet solution found."
        DOTNET_SOLUTION_FILE_PATH=$LS_RESULT
    fi;

    LS_RESULT=`ls dotnet/*.sln`
    if [ $? -eq 0 ];
    then
        echo "Dotnet solution found."
        DOTNET_SOLUTION_FILE_PATH=$LS_RESULT
    fi;

    LS_RESULT=`ls dotnet/*/*.sln`
    if [ $? -eq 0 ];
    then
        echo "Dotnet solution found: $LS_RESULT"
        DOTNET_SOLUTION_FILE_PATH=$LS_RESULT
    fi;

    if [[ $DOTNET_SOLUTION_FILE_PATH == "" ]];
    then
        echo "No dotnet solution found. Exiting."
        exit 1;
    fi;
}

# Restores dotnet solution nuget dependencies
restoreDotnetSolution() {
    findDotnetSolution

    echo "Running dotnet restore on the solution..."
    dotnet restore $DOTNET_SOLUTION_FILE_PATH
    echo "Dotnet solution restored"
}
```

Note: I don’t use bash scripts for advanced tasks, so take this example with a grain of salt.

This new function, findDotnetSolution, is responsible for finding a dotnet solution file (in the form of filename.sln) under the current directory. It uses the ls command to list the directory contents of the given path and checks the exit status of that command to determine whether or not matching files were found.

This solution removes some of the static configuration necessary for the script to work, but it’s still pretty ugly because it’s mostly duplicated code. Of course, Bash has a for loop concept like most other scripting and programming languages, so let’s rewrite it to loop over the paths where we expect a dotnet solution to be found.

```bash
#!/bin/bash

DOTNET_SOLUTION_FILE_PATH=""

# Checks three common locations under the current directory for a .sln file, or exits if one
# is not found. If found, DOTNET_SOLUTION_FILE_PATH is set to the value.
findDotnetSolution() {
    for PATH_PATTERN in *.sln dotnet/*.sln dotnet/*/*.sln
    do
        LS_RESULT=`ls $PATH_PATTERN`
        # If the exit status of that ls command is 0, it means at least one file matching the pattern exists.
        if [ $? -eq 0 ];
        then
            echo "Dotnet solution found."
            DOTNET_SOLUTION_FILE_PATH=$LS_RESULT
        fi;
    done

    if [[ $DOTNET_SOLUTION_FILE_PATH == "" ]];
    then
        echo "No dotnet solution found. Exiting."
        exit 1;
    fi;
}

# Restores dotnet solution nuget dependencies
restoreDotnetSolution() {
    findDotnetSolution

    echo "Running dotnet restore on the solution..."
    dotnet restore $DOTNET_SOLUTION_FILE_PATH
    echo "Dotnet solution restored"
}
```

This solution is better — it removes the duplicated code above, but it will still likely confuse developers who do not regularly write Bash scripts. For one, what does $? mean? Why does the first if block use [ and ], whereas the second block uses [[ and ]]? Why can’t we just return the actual path from findDotnetSolution?

What is the difference between bash and other languages?

- Functions cannot return a value, only an exit code. Think of each function as its own executable program: when you run and shut down `dotnet`, it does not return anything, just a numeric code representing the execution as a success or failure (generally 0 is a successful run/shutdown, and non-zero represents a failure).
  - There are some ‘workarounds’ for this behavior, but I personally find them undesirable and more confusing.

- There are multiple ways to write an ‘if’ block or test a condition. `if`, the `test` command, `[[ condition ]]` and `[ condition ]` are all ways to ‘test’ a condition in Bash, but the double brackets support logical operators such as `&&`, `||`, `<`, and `>` while the single brackets do not. Technically, brackets are not necessary when writing an `if` block. See the referenced documentation for further examples.

- Variables are referenced with a preceding `$`, whereas they are declared without one. Notice that `DOTNET_SOLUTION_FILE_PATH` is declared early on without the `$`, but needs to be accessed with the `$`, or the literal string “DOTNET_SOLUTION_FILE_PATH” will be used.

- Bash has some built-in “special” variables such as `$?`, which returns the exit value of the last executed command.
  - I’m sure there are other languages with special variables, and some could argue language constants and enumerations could be considered special variables.
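The first point, that a program hands back only an exit code, can be seen from JavaScript as well. The sketch below uses Node's built-in child_process module to run two tiny child programs; the runChild helper and the scripts passed to it are made up for illustration. Capturing whatever the child prints is one common workaround for the missing return value.

```javascript
const { spawnSync } = require("node:child_process");

// Runs a small Node script as a separate process and reports the only things
// a process can hand back: its exit code and whatever it printed.
function runChild(script) {
    const result = spawnSync(process.execPath, ["-e", script], {
        encoding: "utf8",
    });

    return { code: result.status, stdout: result.stdout.trim() };
}

// A successful run: exit code 0, and the "return value" travels through stdout
const ok = runChild('console.log("Example.sln")');

// A failed run: a non-zero exit code and nothing printed
const failed = runChild("process.exit(3)");

console.log(ok); // { code: 0, stdout: 'Example.sln' }
console.log(failed); // { code: 3, stdout: '' }
```

Inside a single JavaScript process none of this ceremony is needed, since a function can simply return the path it found.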

There’s more to cover, but these are some of the things I noticed when trying to remember how Bash worked to write examples for this post. It’s worth noting that this makes sense, and isn’t necessarily a bash on Bash: Bash is not a programming language, it is a command shell that can be used to write scripts. It can be useful for automating repetitive tasks you perform on the command line for creating files, changing directories, receiving and forwarding input from other programs or commands, etc. It shouldn’t be used as a general purpose programming language.

The examples up to this point are included in the phase1 directory of the example repository.

So how ELSE could you write one?

ShellJS is a node package that provides a cross-platform implementation of common shell commands, such as cd, ls, cat, and more. It is unit tested and best of all: it’s just JavaScript! It allows you to write modular, unit testable chunks of code in a language that you are likely already using in your project.

Take, for example, the command we created above that wraps dotnet restore.

```javascript
// -----------------------------------------------------------------------------------------
// #region Imports
// -----------------------------------------------------------------------------------------

const shell = require("shelljs");

// #endregion Imports

// -----------------------------------------------------------------------------------------
// #region Variables
// -----------------------------------------------------------------------------------------

// Wild-card searches used when finding the solution file. Ordered by most to least performant
const solutionFilePaths = ["*.sln", "dotnet/*.sln", "dotnet/*/*.sln"];

// #endregion Variables

// -----------------------------------------------------------------------------------------
// #region Functions
// -----------------------------------------------------------------------------------------

/**
 * Returns the first match of the provided file expression
 */
function getFirstFile(fileExpression) {
    const result = shell.ls(fileExpression)[0];

    return result;
}

/**
 * Retrieves the first dotnet solution file path from a list of common paths
 */
function getSolutionPath() {
    let solutionPath;
    for (var filePath of solutionFilePaths) {
        solutionPath = getFirstFile(filePath);
        if (solutionPath !== undefined) {
            return solutionPath;
        }
    }

    return undefined;
}

/**
 * Calls dotnet restore on the solution path (if found). Exits with an error message
 * if the restore command returns a failure exit code.
 *
 */
function restoreDotnetSolution() {
    const solutionPath = solutionPathOrExit();

    shell.echo("Running dotnet restore on the solution...");

    const result = shell.exec(`dotnet restore ${solutionPath}`);
    if (result.code !== 0) {
        shell.echo("Error running dotnet restore!");
        shell.exit(result.code);
    }

    shell.echo("Dotnet solution restored");
}

/**
 * Retrieves the dotnet solution file path or exits if it isn't found
 */
function solutionPathOrExit() {
    const solutionPath = restoreDotnetSolutionModule.getSolutionPath();
    if (solutionPath !== undefined) {
        return solutionPath;
    }

    shell.echo("Unable to find dotnet solution file");
    shell.exit(1);
}

// #endregion Functions

// -----------------------------------------------------------------------------------------
// #region Exports
// -----------------------------------------------------------------------------------------

const restoreDotnetSolutionModule = {
    getSolutionPath,
    getFirstFile,
    solutionPathOrExit,
    restoreDotnetSolution,
};

module.exports = restoreDotnetSolutionModule;

// #endregion Exports
```

Note: There is another file not shown called ‘main.js’ (arbitrary) which acts as the entrypoint and calls restoreDotnetSolution().

This program is broken up in a very similar way to the Bash script:

- It loops over an array of common file patterns where a dotnet solution file might be found.

- It exits early if no solution file can be found.

- The solution path is set as a variable and passed along to the dotnet restore command.

While at first glance this example looks longer and more complex, it does have some advantages over Bash. For one, you can easily write unit tests to prove this functionality works.

```javascript
// -----------------------------------------------------------------------------------------
// #region Imports
// -----------------------------------------------------------------------------------------

const faker = require("faker");
const shell = require("shelljs");
const sut = require("./restore-dotnet-solution");

// #endregion Imports

// -----------------------------------------------------------------------------------------
// #region Tests
// -----------------------------------------------------------------------------------------

describe("restoreDotnetSolution", () => {
    afterEach(() => {
        // Clear out any mocked functions and restore their original implementations after each test
        jest.restoreAllMocks();
    });

    // -----------------------------------------------------------------------------------------
    // #region getFirstFile
    // -----------------------------------------------------------------------------------------

    describe("getFirstFile", () => {
        test("when more than 1 file is found, it returns the first one", () => {
            // Arrange
            jest.spyOn(shell, "ls").mockImplementation(() => [
                "file1.jpg",
                "file2.jpg",
            ]);

            // Act
            const result = sut.getFirstFile("file"); // <- What we pass in here is arbitrary, since we're mocking the ls return anyway.

            // Assert
            expect(result).toBe("file1.jpg");
        });
    });

    // #endregion getFirstFile

    // -----------------------------------------------------------------------------------------
    // #region getSolutionPath
    // -----------------------------------------------------------------------------------------

    describe("getSolutionPath", () => {
        test("when no solution file is found, it returns undefined", () => {
            // Arrange & Act
            const result = sut.getSolutionPath();

            // Assert
            expect(result).not.toBeDefined();
        });

        test("when solution file is found, it returns that path", () => {
            // Arrange
            jest.spyOn(shell, "ls").mockImplementation(() => ["Example.sln"]);

            // Act
            const result = sut.getSolutionPath();

            // Assert
            expect(result).toBeDefined();
            expect(result).toBe("Example.sln");
        });
    });

    // #endregion getSolutionPath

    // -----------------------------------------------------------------------------------------
    // #region solutionPathOrExit
    // -----------------------------------------------------------------------------------------

    describe("solutionPathOrExit", () => {
        test("when no solution file is found, it calls shell.exit with exit code 1", () => {
            // Arrange
            const shellExitSpy = jest
                .spyOn(shell, "exit")
                .mockImplementation((code) =>
                    console.log(`shell.exit was called with code: ${code}`)
                );

            // Act
            sut.solutionPathOrExit();

            // Assert
            expect(shellExitSpy).toBeCalledWith(1);
        });
    });

    // #endregion solutionPathOrExit

    // -----------------------------------------------------------------------------------------
    // #region restoreDotnetSolution
    // -----------------------------------------------------------------------------------------

    describe("restoreDotnetSolution", () => {
        test("when solutionPathOrExit fails to find the path, it calls shell.exit with exit code 1", () => {
            // Arrange
            jest.spyOn(sut, "solutionPathOrExit").mockImplementation(() => {
                shell.exit(1);
            });
            const shellExitSpy = jest
                .spyOn(shell, "exit")
                .mockImplementation((code) =>
                    console.log(`shell.exit was called with code: ${code}`)
                );

            // Act
            sut.restoreDotnetSolution();

            // Assert
            expect(shellExitSpy).toBeCalledWith(1);
        });

        test("when shell.exec returns non-zero exit code, it calls shell.exit with that code", () => {
            // Arrange
            const exitCode = faker.random.number({ min: 1, max: 50 });
            jest.spyOn(shell, "exec").mockImplementation(() => {
                return { code: exitCode };
            });
            const shellExitSpy = jest
                .spyOn(shell, "exit")
                .mockImplementation((code) =>
                    console.log(`shell.exit was called with code: ${code}`)
                );

            // Act
            sut.restoreDotnetSolution();

            // Assert
            expect(shellExitSpy).toBeCalledWith(exitCode);
        });
    });

    // #endregion restoreDotnetSolution
});

// #endregion Tests
```

We are using Jest as our JS testing framework

Secondly, because this is just another module, you can import it elsewhere in your project to reuse the same code. Take this short example that restores the dotnet solution dependencies using the above code, and then builds the solution.

```javascript
// -----------------------------------------------------------------------------------------
// #region Imports
// -----------------------------------------------------------------------------------------

const shell = require("shelljs");
const {
    restoreDotnetSolution,
    solutionPathOrExit,
} = require("./restore-dotnet-solution");

// #endregion Imports

// -----------------------------------------------------------------------------------------
// #region Functions
// -----------------------------------------------------------------------------------------

function buildDotnetSolution() {
    // Check for the solution path before continuing. If we cannot find it here, the dotnet
    // restore will fail, but we're going to need the path later on anyway.
    const solutionPath = solutionPathOrExit();

    restoreDotnetSolution();

    shell.echo("Running dotnet build on the solution...");

    const result = shell.exec(`dotnet build ${solutionPath} --no-restore`);
    if (result.code !== 0) {
        shell.echo("Error running dotnet build!");
        shell.exit(result.code);
    }

    shell.echo("Dotnet solution built");
}

// #endregion Functions

// -----------------------------------------------------------------------------------------
// #region Exports
// -----------------------------------------------------------------------------------------

const buildDotnetSolutionModule = {
    buildDotnetSolution,
};

module.exports = buildDotnetSolutionModule;

// #endregion Exports
```

Note that while this code works, it would probably make more logical sense to break out some of the common functions (getFirstFile, getSolutionPath, and solutionPathOrExit) into their own module, since they aren’t directly tied to just restoring or just building the dotnet solution. By pulling them out into their own module, they are logically separated and can be tested independently.
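One possible shape for that shared module is sketched below. It is an assumption, not code from the example repository: the createDotnetPath factory name is hypothetical, and instead of requiring ShellJS directly it accepts any object with ShellJS-style ls, echo, and exit functions, which keeps the sketch dependency-free and easy to exercise with a stand-in.

```javascript
// Hypothetical shared module, e.g. "dotnet-path.js" (file name is an assumption).
// Wild-card searches used when finding the solution file, as in the module above.
const solutionFilePaths = ["*.sln", "dotnet/*.sln", "dotnet/*/*.sln"];

// `shell` is any object exposing ShellJS-style ls(), echo() and exit() functions
function createDotnetPath(shell) {
    // Returns the first match of the provided file expression
    function getFirstFile(fileExpression) {
        return shell.ls(fileExpression)[0];
    }

    // Retrieves the first dotnet solution file path from the list of common paths
    function getSolutionPath() {
        for (const filePath of solutionFilePaths) {
            const solutionPath = getFirstFile(filePath);
            if (solutionPath !== undefined) {
                return solutionPath;
            }
        }

        return undefined;
    }

    // Retrieves the dotnet solution file path or exits if it isn't found
    function solutionPathOrExit() {
        const solutionPath = getSolutionPath();
        if (solutionPath !== undefined) {
            return solutionPath;
        }

        shell.echo("Unable to find dotnet solution file");
        shell.exit(1);
    }

    return { getFirstFile, getSolutionPath, solutionPathOrExit };
}

// Usage with a stand-in shell object that only "finds" a file under dotnet/
const fakeShell = {
    ls: (pattern) => (pattern === "dotnet/*.sln" ? ["dotnet/Example.sln"] : []),
    echo: (message) => console.log(message),
    exit: (code) => console.log(`exit called with code: ${code}`),
};

const dotnetPath = createDotnetPath(fakeShell);
console.log(dotnetPath.solutionPathOrExit()); // prints "dotnet/Example.sln"
```

In a real project you would pass in the ShellJS module itself, and both the restore and build modules could then share the same path helpers.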

The examples up to this point are included in the phase2 directory of the example repository.

Your custom CLI: building arguments, options, and documentation

If you’ve been following so far, perhaps you may have noticed that the current setup does not actually take any input from the user. As noted in a code sample above, there was a main.js file that called our function restoreDotnetSolution, but it didn’t pass any arguments along. The function knew how to find the solution, restore it, and check for errors.

If you’ve used any command line interface before, you’re likely accustomed to passing in certain argument or option flags to change the configuration on the command. For example, what if we wanted to pass an option to explicitly clean the build asset directories before restoring and building again? Something along these lines:

```
cli dotnet --clean --restore --build
```

Using the Commander package, we will write a main entrypoint for our CLI program, as well as a sub-command file for running dotnet-related actions.

```javascript
#!/usr/bin/env node

// -----------------------------------------------------------------------------------------
// #region Imports
// -----------------------------------------------------------------------------------------

const program = require("commander");

// #endregion Imports

// -----------------------------------------------------------------------------------------
// #region Entrypoint / Command router
// -----------------------------------------------------------------------------------------

// Description to display to the user when running the cli without arguments
program.description("Example cli written using ShellJS and Commander");

// Registers the dotnet command via 'cli-dotnet.js' in the same folder. Commands must be created
// in a file prefixed with the name of this main file (ie, 'cli-<command>')
program.command(
    "dotnet",
    "Run various dotnet test runner commands for the project"
);

// Attempt to parse the arguments passed in and defer to subcommands, if any.
// If no arguments are passed in, this will automatically display the help information for the user.
program.parse(process.argv);

// #endregion Entrypoint / Command router
```

Right now, we only have one sub-command, which is dotnet. If you had other sub-commands for interacting with Jest, Webpack, etc., you’d register them here. The second argument to command is the description of the command, which should be short but useful to a new user navigating the interface of your program. Next, you’d create a file whose name is the entrypoint name, a hyphen, and then your command name, i.e. cli-dotnet.js. All sub-commands of your CLI program will follow this pattern, and its consistent structure makes it easy to find, add, or update commands.
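The naming convention can be expressed in a few lines. This is only an illustration of the pattern described above, not Commander's actual lookup code, and the subcommandFileName helper is a hypothetical name.

```javascript
const path = require("node:path");

// Hypothetical helper: derives the expected sub-command file name from the
// entrypoint file and the command name, following the 'cli-<command>' pattern.
function subcommandFileName(entrypointPath, commandName) {
    // Strip any directory and the file extension, e.g. "bin/cli.js" -> "cli"
    const entrypointName = path.basename(
        entrypointPath,
        path.extname(entrypointPath)
    );

    return `${entrypointName}-${commandName}.js`;
}

console.log(subcommandFileName("cli.js", "dotnet")); // prints "cli-dotnet.js"
console.log(subcommandFileName("cli.js", "jest")); // prints "cli-jest.js"
```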

This new sub-command file, cli-dotnet.js, will be responsible for parsing arguments from the user and deferring execution to what we declared as “modules” earlier. Generally speaking, it is cleaner and easier to test business logic for these CLI commands when their functions are broken out and the CLI file acts as the layer of “glue” to pull them together.

```javascript
#!/usr/bin/env node

// -----------------------------------------------------------------------------------------
// #region Imports
// -----------------------------------------------------------------------------------------

const dotnetBuild = require("./_modules/dotnet-build");
const dotnetClean = require("./_modules/dotnet-clean");
const dotnetRestore = require("./_modules/dotnet-restore");
const program = require("commander");

// #endregion Imports

// -----------------------------------------------------------------------------------------
// #region Entrypoint / Command router
// -----------------------------------------------------------------------------------------

program
    .usage("option(s)")
    .description(
        `Run various commands for the dotnet project. Certain options can be chained together for specific behavior
        (--clean and --restore can be used in conjunction with --build).`
    )
    .option("-b, --build", dotnetBuild.description())
    .option("-c, --clean", dotnetClean.description())
    .option("-R, --restore", dotnetRestore.description())
    .parse(process.argv);

// Only run dotnet clean on its own if we aren't building in the same command
// Otherwise, that command will run the clean.
if (!program.build && program.clean) {
    dotnetClean.run();
}

// Only run dotnet restore on its own if we aren't building in the same command
// Otherwise, that command will run the restore.
if (!program.build && program.restore) {
    dotnetRestore.run();
}

if (program.build) {
    dotnetBuild.run(program.clean, program.restore);
}

// If no options are passed in, output help
if (process.argv.slice(2).length === 0) {
    program.outputHelp();
}

// #endregion Entrypoint / Command router
```

As you can see, this file follows a similar structure to the main entrypoint, cli.js. Arguments from the user are registered as “options” with a “flag” string and an optional description (though providing one is recommended). You can specify short flags (such as -b), verbose flags (such as --build), or both (-b, --build) as valid ways to “call” the commands. The presence of those options from the user will set a boolean flag on the program object itself, where you can conditionally call the modules you’ve written and imported. (This is the behavior of Commander versions before 7; newer versions store parsed options separately by default and expose them through program.opts().)
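As an aside, the branching on those boolean flags is easy to reason about when written as a pure function. The sketch below mirrors the conditions in cli-dotnet.js; the planDotnetActions name and the shape of the objects it returns are made up for illustration and are not part of Commander or the example repository.

```javascript
// Mirrors the branching in cli-dotnet.js as a pure function. The returned
// objects are a made-up format for this sketch, not a Commander structure.
function planDotnetActions({ build = false, clean = false, restore = false } = {}) {
    const actions = [];

    // Clean and restore only run on their own when no build was requested
    if (!build && clean) {
        actions.push({ module: "dotnetClean", args: [] });
    }
    if (!build && restore) {
        actions.push({ module: "dotnetRestore", args: [] });
    }

    // The build receives the clean/restore flags and handles them itself
    if (build) {
        actions.push({ module: "dotnetBuild", args: [clean, restore] });
    }

    return actions;
}

// Equivalent of `cli dotnet --clean --restore`
console.log(planDotnetActions({ clean: true, restore: true }));

// Equivalent of `cli dotnet --build --clean`
console.log(planDotnetActions({ build: true, clean: true }));
```

Keeping the decision separate from the side effects means the option combinations can be unit tested without running any dotnet commands.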

There’s some additional logic at the end of the file to check when “no arguments” are passed in (such as just typing cli dotnet), which will present the user with the help menu. (The user might not know to use the -h or --help flags to display the same information, which is a ‘self-documenting’ feature of Commander.)

```
Usage: cli-dotnet option(s)

Run various commands for the dotnet project. Certain options can be chained together for specific behavior (--clean and --restore can be used in conjunction with --build).

Options:
  -b, --build    Builds the dotnet project (via dotnet build dotnet/api/Api.sln --no-restore)
  -c, --clean    Clean the dotnet solution from the root of the project (via dotnet clean dotnet/api)
  -R, --restore  Restore the dotnet solution from the root of the project (via dotnet restore)
  -h, --help     display help for command
```
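The “no arguments” check mentioned above works because of how Node lays out process.argv: the first two entries are the Node executable and the script path, so anything the user typed starts at index 2. A minimal sketch, with a hypothetical helper name and made-up paths:

```javascript
// process.argv always starts with the Node executable and the script path,
// so anything the user typed begins at index 2.
function hasNoUserArguments(argv) {
    return argv.slice(2).length === 0;
}

// Stand-in argv arrays; the paths are made up for illustration.
const bareInvocation = ["/usr/bin/node", "/usr/local/bin/cli-dotnet.js"];
const buildInvocation = [...bareInvocation, "--build"];

console.log(hasNoUserArguments(bareInvocation)); // prints true
console.log(hasNoUserArguments(buildInvocation)); // prints false
```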

The examples up to this point are included in the phase3 directory of the example repository.

With that piece covered, you should have everything you need to get started building out CLI tools for your own team. I’ve included a full repository with each “phase” of this blog post, as well as a link to the CLI that the engineering team at andculture has built.

Resources and more examples

- View the source code for the AndcultureCode.Cli mentioned earlier

- Full repository containing the examples in this post

- ShellJS on npm

- Commander on npm

- Jest on npm